I had the pleasure of opening the AI Day in Nouvelle Aquitaine, organized by AI in Nouvelle Aquitaine. For the occasion, I allowed myself to venture into territory close to philosophy (probably a lingering influence of the Philosophia conference and the Citizens' Convention). I wanted to answer a question that is never asked, yet may explain why AI so urgently needs trust and explainability.

Does AI still belong to digital science?

The question is deliberately provocative. AI is, of course, part of the digital world. What I wanted to highlight is this: why do we now feel such a strong need for trust and explainability in artificial intelligence? Why not simply apply our old recipes? After all, we have known how to trust digital systems for decades. By pursuing this seemingly simple question, I tried to pinpoint what fundamentally changes with the arrival of generative AI, especially in our relationship to meaning, truth, decision-making, and responsibility.

In short, AI has benefited from the impressive explosion of digital technology: its power and its omnipresence in our daily lives. AI has become the main entry point into the digital world, but it goes further. Generative AI changes the game. Until now, digital technology was a "documentary" tool that helped us better understand, analyze, and reflect the real world. Carried by continuous progress in computing power, storage, and connectivity, generative AI has crossed a threshold: the machine no longer merely manipulates symbols; in a sense, it simulates its own meaning, and that meaning is foreign to us. Our problem is no longer to map the symbols manipulated by the machine onto our reality, but to align a meaning that is foreign to us (the internal representations of AI models) with the meaning we assign. This makes the task much more difficult!

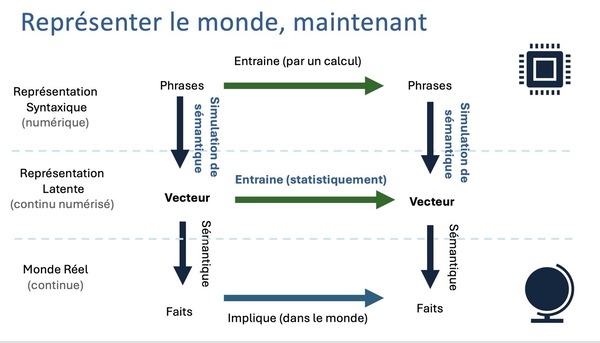

Until now, we could rest easy thanks to what is called the double digital divide. On one hand, there is a semantic divide: the machine processes signs without understanding their real meaning, and it is always a human who interprets them. On the other, there is a material divide: computation is independent of physical reality. Our verification and explanation methods relied on a formal (symbolic) representation of the world, and we "only had to" ensure that the symbols produced matched their reality, their semantics (which was already very complex). Today, the boundary between syntax (the symbols used for computation) and semantics seems more fragile to me, because LLMs operate on points in huge latent spaces that do carry semantics (though that is a philosophical debate in itself).

Our relationship with digital technology must change with AI. The old recipes we developed for digital systems are no longer suitable. AI is no longer just a tool: it is an actor in the production of knowledge, meaning, and emotional experiences. AI has transformed the digital realm, which is no longer merely documentary.

In the end, all of this is an attempt to understand why large language models are so extremely difficult to understand. Is understanding them even possible?